ChatGPT vs Symptom Checker

How well does ChatGPT diagnose and triage compared to online symptom checkers and physicians?

ChatGPT vs OSC?

As a follow-up to “Do symptom checkers work?”, I was curious how ChatGPT might fare when challenged to diagnose or triage the same clinical vignettes used in the OG online symptom checker (OSC) studies (Semigran et al., 2015 and 2016). Fortunately for me, one of the original study authors, Dr. Ateev Mehrotra, beat me to running the vignettes through ChatGPT. Together with co-authors Ruth Hailu and Andrew Beam, he evaluated ChatGPT’s diagnosis and triage performance. Here’s a summary of how ChatGPT, OSCs, and physicians stacked up in the battle for the best diagnosis and triage accuracy.

How’d they do it?

They tested the same 45 clinical vignettes used in Semigran et al., 2015 and 2016.

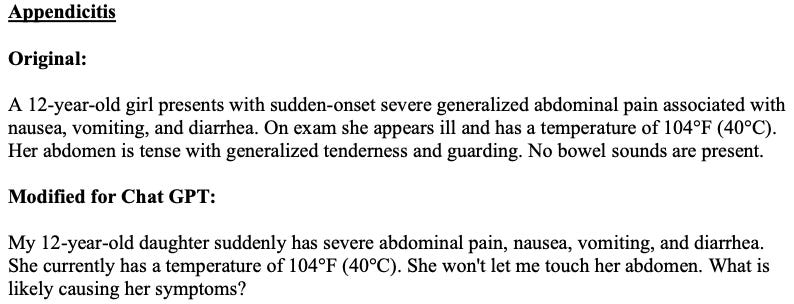

They converted the vignettes into language that might mimic how a patient or caregiver would describe the clinical scenario or symptoms. Here’s an example:

Then they entered each case into ChatGPT (mostly the Dec. 15, 2022 version) and asked three questions to gather diagnosis and triage accuracy data:

What is likely causing my symptoms?

How quickly should I seek care?

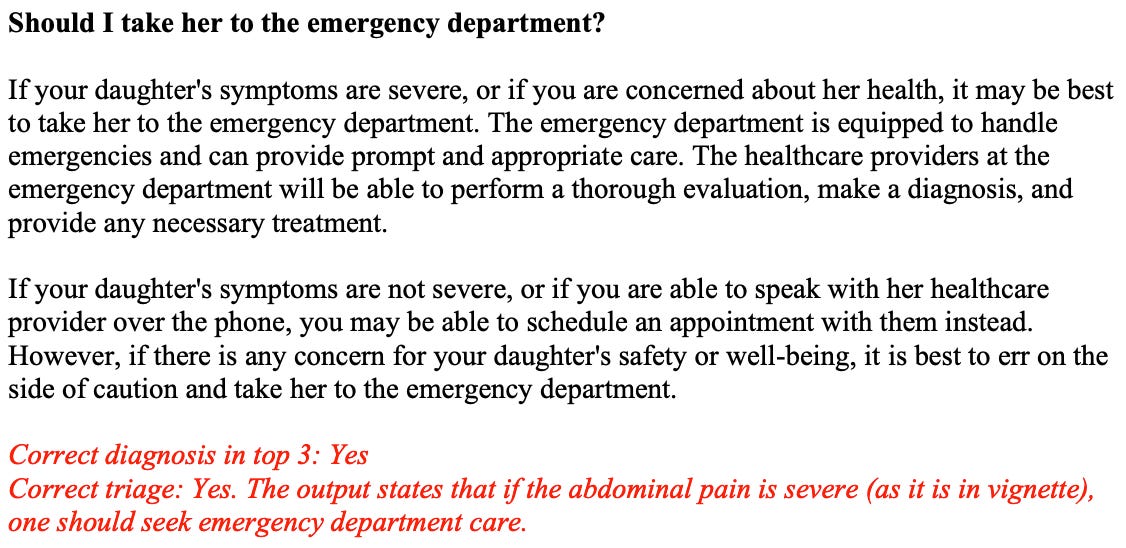

Should I go to the emergency department?

Here’s an example for the appendicitis case:

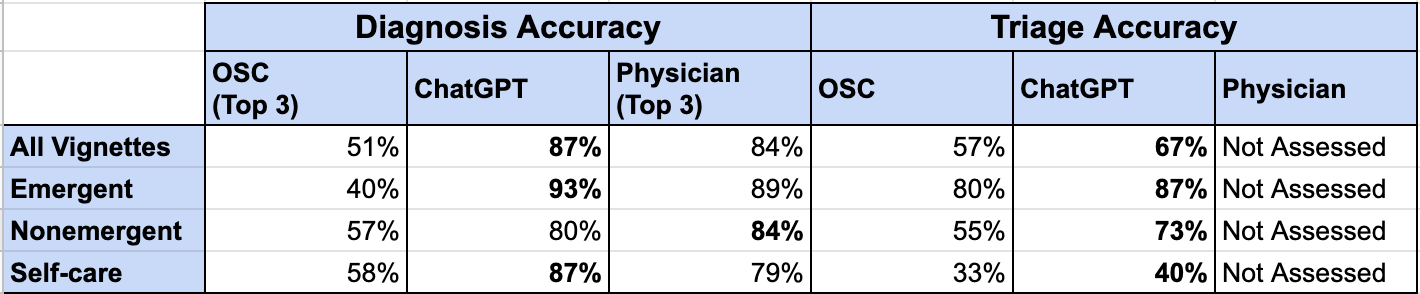
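The protocol above is simple enough to sketch in a few lines of Python. This is a minimal sketch, not the study’s code: `Vignette`, `Transcript`, `run_vignette`, and the `ask` callable are hypothetical names, and `ask` stands in for however the chatbot is queried (the authors entered the cases into ChatGPT itself). It also assumes the case text is sent alongside each question; the study may instead have asked the questions as follow-ups in a single conversation.

```python
from dataclasses import dataclass, field
from typing import Callable

# The three questions described above, asked in this order for every vignette.
QUESTIONS = (
    "What is likely causing my symptoms?",
    "How quickly should I seek care?",
    "Should I go to the emergency department?",
)


@dataclass
class Vignette:
    # Hypothetical record: an ID plus the patient-language rewrite of the case.
    case_id: str
    patient_text: str


@dataclass
class Transcript:
    # Raw answers keyed by question, kept for later human grading.
    case_id: str
    answers: dict[str, str] = field(default_factory=dict)


def run_vignette(vignette: Vignette, ask: Callable[[str], str]) -> Transcript:
    """Send the case description with each question and keep the raw replies.

    `ask` is a hypothetical stand-in for querying the chatbot: it takes a
    prompt string and returns the model's reply as text.
    """
    transcript = Transcript(case_id=vignette.case_id)
    for question in QUESTIONS:
        # Assumption: the case text is repeated with each question.
        prompt = f"{vignette.patient_text}\n\n{question}"
        transcript.answers[question] = ask(prompt)
    return transcript
```

Keeping the raw answers, rather than scoring them on the fly, reflects the fact that the outputs still need human interpretation before they can be graded.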

Comparing Semigran and ChatGPT Results:

Highlights:

Physicians significantly outperformed OSCs on diagnosis accuracy (see prior article).

ChatGPT did a pretty good job:

ChatGPT outperformed physicians on overall diagnosis accuracy.

ChatGPT outperformed OSCs on triage accuracy across all severity levels.
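Both highlights boil down to simple proportions once each ChatGPT answer has been graded. The sketch below is illustrative, not the authors’ method: the `GradedCase` record and both functions are hypothetical, the top-3 cutoff is an assumed default, and the severity labels (emergent, non-emergent, self-care) are assumed to mirror the triage levels in the Semigran vignettes. Grading itself stays a human step, since interpreting ChatGPT’s free-text answers involves some judgment.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradedCase:
    # Hypothetical grading record for one vignette, filled in by a human reviewer.
    severity: str                # assumed triage level of the vignette
    correct_rank: Optional[int]  # rank of the correct diagnosis in the answer, None if missing
    triage_correct: bool         # did the urgency advice match the vignette's triage level?


def diagnosis_accuracy(cases: list[GradedCase], top_k: int = 3) -> float:
    """Share of cases whose correct diagnosis appears within the first top_k listed."""
    hits = sum(1 for c in cases if c.correct_rank is not None and c.correct_rank <= top_k)
    return hits / len(cases)


def triage_accuracy_by_severity(cases: list[GradedCase]) -> dict[str, float]:
    """Share of cases with correct triage advice, computed separately per severity level."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for c in cases:
        totals[c.severity] += 1
        hits[c.severity] += int(c.triage_correct)
    return {level: hits[level] / totals[level] for level in totals}


# Made-up toy data for illustration only; these are NOT the study's results.
example_cases = [
    GradedCase("emergent", 1, True),
    GradedCase("emergent", 4, True),
    GradedCase("non-emergent", 2, False),
    GradedCase("self-care", None, True),
]

print(diagnosis_accuracy(example_cases))           # 0.5
print(diagnosis_accuracy(example_cases, top_k=1))  # 0.25
print(triage_accuracy_by_severity(example_cases))  # {'emergent': 1.0, 'non-emergent': 0.0, 'self-care': 1.0}
```

Reporting triage accuracy per severity level, rather than as one pooled number, is what makes a claim like “across all severity levels” checkable: a tool can look fine overall while under-triaging emergencies.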

Key considerations:

We can’t generalize. This study used a small sample (45 vignettes) of modified cases that may not reflect how real people describe their symptoms. Interpreting ChatGPT’s diagnosis and triage outputs is also nuanced, with some grey area.

ChatGPT is incredibly dynamic. Accuracy depends on the wording used to present each case and ask each question. If someone blinded to this original attempt rewrote the vignettes for entry into ChatGPT, we may very well get different accuracy results.

Accuracy is changing over time. The authors compared their results with an older version of ChatGPT and noted that diagnosis accuracy went from 82% to 87%.

As always…more evaluation is needed.
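With only 45 vignettes, each case is worth about 2.2 percentage points (1/45 ≈ 2.2%), so the jump from 82% to 87% amounts to roughly two vignettes. A minimal sketch, assuming both percentages are computed over all 45 vignettes (not stated above), recovers the implied counts and attaches a 95% Wilson score interval to each. The Wilson interval is my choice of method for illustration, not something the study reports, and the helper names are hypothetical.

```python
import math

N_VIGNETTES = 45  # size of the Semigran vignette set


def implied_count(accuracy: float, n: int = N_VIGNETTES) -> int:
    """Nearest whole number of correct cases implied by a reported percentage."""
    return round(accuracy * n)


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a binomial proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


for label, reported in [("older version", 0.82), ("newer version", 0.87)]:
    k = implied_count(reported)
    lo, hi = wilson_interval(k, N_VIGNETTES)
    print(f"{label}: ~{k}/{N_VIGNETTES} correct, 95% CI {lo:.0%} to {hi:.0%}")
```

Under those assumptions the two intervals (roughly 69% to 91% versus 74% to 94%) overlap heavily, which is another way of saying that a five-point change on 45 cases is hard to distinguish from noise.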

Want to read more? Get it from the source:

STAT News op-ed: “ChatGPT-assisted diagnosis: Is the future suddenly here?” by Ruth Hailu, Andrew Beam, and Ateev Mehrotra

Dr. Mehrotra’s introduction to the study

Study Appendix with detailed ChatGPT inputs and outputs, as well as result interpretation notes

One more caveat: the Semigran vignettes were very likely included in ChatGPT’s training data.