Do symptom checkers work?

What academic literature has to say about diagnosis & triage accuracy

What you’ll discover:

Online Symptom Checker (OSC) triage and diagnosis accuracy is generally low, but studies don’t capture the complexity of real-world patients and their symptom checking habits. I have some suggestions on future study designs…

You might be surprised by the benchmarks on triage and diagnosis accuracy for nurses and physicians.

Continued AI model refinement holds promise in boosting performance. In the meantime, researchers call for development and evaluation standards.

Studies to know:

Semigran et al., 2015: First study to evaluate general medicine across the full triage spectrum using clinical vignettes. Many have gone on to copy this approach.

Semigran et al., 2016: A research letter that follows up on the previous study by testing the same 2015 clinical vignettes directly on physicians. First study of its kind to directly compare physicians to OSCs.

Wallace et al., 2022: Most recent systematic review of OSC accuracy.

Painter et al., 2022: Viewpoint article that details study challenges and proposes 15 requirements for an OSC evaluation standard.

Introduction

While many turn to Google first for health queries, it often leads to confusing, unsafe, and inaccurate information (El-Osta et al., 2022). OSCs aim to provide personalized and appropriate answers that are better than a search engine and hopefully at least as good as a clinician's assessment. Through a chatbot interface, the system asks questions to arrive at possible explanations for the symptoms. To do so, AI-powered OSCs may leverage epidemiologic data, Bayesian statistics, and learned models. Over-triaging, under-triaging, and incorrect diagnosis information are risky and costly. So, just how good are OSCs at predicting the right assessment? Let’s explore approaches to clinical validation of symptom checkers, academic literature on their performance, and how those results stack up against gold standard benchmarks. We’ll wrap up with some thoughts for future work.

Current Evaluation Methods

GOLD STANDARD. The goal of a symptom checker is to produce a safe, accurate triage disposition and differential diagnosis. The computer’s results should be at least as safe and accurate as the existing gold standard: a clinician’s assessment. While there are proposed standards and frameworks for digital health validation, study methodologies for OSC accuracy vary (Mathews et al., 2019; NICE; Painter et al., 2022). So, how do studies evaluate OSCs at present? There are two main approaches:

Clinical vignettes

Prospective clinical trials

CLINICAL VIGNETTES. A clinical vignette tells the story of a patient’s presentation representing a given clinical scenario or diagnosis. Collectively called “test cases”, the vignettes are either 1) simulated (cases fabricated to represent a typical or possible presentation of a diagnosis) or 2) retrospective (real-world cases of patients who had a clinician visit).

Here’s a sample clinical vignette from Semigran et al., 2015:

While there are no standardized requirements, vignettes tend to include age, sex, symptoms, medical history, and other relevant features. Each case is labeled with a “correct” diagnosis, and often a corresponding triage level. Of note, cases may include physical exam, lab, and imaging data, but since OSC use is intended pre-visit, this information typically cannot be used towards triage or diagnosis prediction unless it is historical (e.g., an 18-year-old female with headaches for 3 years who was found to have a normal MRI a month ago).

Simulated vignettes are usually authored by a clinician, but they may also be engineered in large batches “synthetically” by sampling epidemiologic data for a set of diagnoses (Wallace et al., 2022). Both simulated and retrospective clinical vignettes are widely used to evaluate OSCs, but challenges with methodology and risk of bias reduce confidence in the results.

Important limitations:

Authoring. Often written by clinicians, the stories don’t read the way a patient would describe their symptoms and medical history. Real-world complexity of authentic patients is often missing (Painter et al., 2022). Typical use of an OSC may include symptom durations of just a few minutes, which is unlikely to be encountered in a standardized vignette.

Labeling correct diagnosis and triage. Labeling with a “correct” diagnosis and triage level is imperfect.

The vignettes describe clinical scenarios with a single target diagnosis. However, the vignette may also reasonably approximate another diagnosis in the differential. For example, a patient who presents with a new cough and runny nose for two days could have a common cold or COVID-19.

While the extremes of the triage spectrum may be more readily objectively triaged (that is, 10 out of 10 clinicians are likely to correctly identify an obvious life- or limb-threatening emergency), there is a “grey zone” for non-urgent, semi-urgent, and urgent clinical scenarios. Different OSCs may use as few as 3 or more than 8 “levels” of triage describing the timing and site of care associated with the result. This can be further complicated by triage to a specialty (dentist, physical therapist) or a novel care type (asynchronous or synchronous telehealth).

Typically, the vignettes are labeled by one or more clinicians. They may undergo a review process when labels are discordant. Consensus discussion may allow for a final determination of the “correct” label (Painter et al., 2022).
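To make the labeling review concrete, here is a minimal sketch of how discordant labels might be flagged for consensus discussion. The plurality-vote rule, the function name, and the reviewer labels are all assumptions for illustration; they are not taken from Painter et al. or any other cited study.

```python
from collections import Counter

def consensus_label(labels):
    """Return (label, is_unanimous) by plurality vote, or (None, False) on a tie.

    `labels` is a non-empty list of triage labels, one per clinician reviewer.
    A None label means no single label won, so the case needs discussion.
    """
    counts = Counter(labels).most_common()
    top_label, top_count = counts[0]
    # A tie for first place means there is no winning label.
    if len(counts) > 1 and counts[1][1] == top_count:
        return None, False
    return top_label, len(counts) == 1

# Hypothetical reviewer labels for three vignettes (made up for illustration).
reviews = {
    "vignette_a": ["emergency", "emergency", "emergency"],
    "vignette_b": ["self-care", "non-urgent", "non-urgent"],
    "vignette_c": ["self-care", "non-urgent", "emergency"],
}
results = {name: consensus_label(labels) for name, labels in reviews.items()}
needs_discussion = [name for name, (label, _) in results.items() if label is None]
print(results)
print(needs_discussion)
```

In this toy data, the third vignette gets three different answers from three reviewers, so it is routed to discussion rather than being assigned a label automatically.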

Testers. While entering a vignette’s data into an OSC, the tester may be required to make interpretations and assumptions or enter information that is not specified by the case (Painter et al., 2022). In some studies, the authors have a conflict of interest because they work for the OSC company (see Gilbert et al., 2020).

Ideal set. Building a set of test cases is a tricky balance without clear-cut guidelines. How many total vignettes should there be? How many per triage level? How many per possible clinical scenario or target user type? An “ideal” sample would contain a large volume of real-world cases that cover the full breadth and complexity of all possible triage levels, differential diagnoses, and target users. That's a tall order and perhaps overpowered in the grand scheme of an OSC evaluation strategy that should subsequently include a prospective clinical trial. What’s more, this collection can only be used for testing and not for training the model. Otherwise, there’s a risk of tuning the symptom checker to the specific vignette collection, which may artificially boost performance on that collection at the risk of lower performance on a net new collection of cases. For this reason, multiple sets of cases must be generated for serial testing. Of course, in reality, establishing multiple ideal test case collections is challenging, which is why most studies use simulated or synthetic test case collections as opposed to real-world cases (Wallace et al., 2022). In addition, because “half of health information searches are on behalf of someone else”, I think testing should include vignettes of a caregiver reporting on someone else’s behalf (Pew Internet, 2013).
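One way to picture "multiple sets for serial testing" is to deal a pool of labeled vignettes into disjoint collections, each balanced by triage level, so that no case is reused across test rounds. The sketch below is an assumption-laden illustration: the function name, the round-robin scheme, and the placeholder pool (45 cases, 15 per level, echoing the Semigran et al., 2015 layout) are mine, not a published method.

```python
import random
from collections import defaultdict

def split_into_test_sets(vignettes, n_sets, seed=0):
    """Deal vignettes into `n_sets` disjoint collections, balanced by triage level.

    `vignettes` is a list of (vignette_id, triage_level) pairs.
    """
    rng = random.Random(seed)
    by_level = defaultdict(list)
    for vignette_id, level in vignettes:
        by_level[level].append(vignette_id)

    test_sets = [[] for _ in range(n_sets)]
    for level in sorted(by_level):
        ids = by_level[level]
        rng.shuffle(ids)
        # Round-robin so each set gets a near-equal share of every level.
        for i, vignette_id in enumerate(ids):
            test_sets[i % n_sets].append((vignette_id, level))
    return test_sets

# Hypothetical pool of placeholder case IDs, 15 per triage level.
levels = ["emergent", "non-emergent", "self-care"]
pool = [(f"case-{i:02d}", levels[i % 3]) for i in range(45)]
sets = split_into_test_sets(pool, n_sets=3)
for number, test_set in enumerate(sets, start=1):
    print(number, len(test_set))
```

Each set can then be used once for a testing round and retired, which keeps later rounds free of cases the model may have been tuned against.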

PROSPECTIVE CLINICAL STUDY. In a prospective cross-sectional study, a patient uses the OSC at the point of care, just prior to seeing a medical provider. The OSC’s results are compared to the real-world clinician’s assessment. Several prospective cross-sectional studies have been conducted, but they are small, limited to testing one or two OSCs, and typically evaluate cases contained to a single medical specialty rather than broad coverage of diagnoses and triage (Wallace et al., 2022).

While they are steps in the right direction, clinical vignette studies and existing prospective clinical trials don’t provide us enough information to confidently understand how well symptom checkers work in the wild. Experts call for the adoption of an evaluation methodology that uses real-world vignettes that capture the complexity of actual patients, along with prospective, comprehensive clinical studies that evaluate OSCs in the real-world setting (Painter et al., 2022; Wallace et al., 2022).

Measures of success

Technical and clinical evaluation of a symptom checker will typically focus on the safety and accuracy of the triage and differential diagnosis outputs. While definitions vary by study, metrics reported in the literature may include:

Diagnosis accuracy: The correct diagnosis was listed by the symptom checker. Diagnosis accuracy may be reported as a match within the top 1 (primary), top 3, or even top 20 resulting diagnoses (Semigran et al., 2015; Gilbert et al., 2020).

Triage accuracy: The resulting triage is equal to the correct label. That is, the triage is accurate if the OSC produces an emergency triage recommendation for a test case labeled as an emergency. If multiple triage locations are suggested (for example, emergency department or primary care within 2 weeks), studies may use the most urgent suggestion to report a triage accuracy metric (Semigran et al., 2015).

Triage safety: Not commonly reported, but in one study it is defined as a triage result that is a maximum of one level less conservative than the correct label (Gilbert et al., 2020). For example, the outcome is safe if the OSC produces a recommendation of at least a same-day evaluation for a vignette labeled an emergency. I’d argue that a better definition for triage safety is a triage result that is at least as conservative as the correct label.

Breadth of coverage: The OSC provides condition suggestions and levels of urgency for the vignette. There is a lack of coverage if an OSC will not allow entry of a case, for example when a pregnant person or an infant cannot be assessed (Gilbert et al., 2020).
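The metric definitions above can be sketched in a few lines. Everything here is illustrative: the three-level triage scale, the function names, and the four test cases are assumptions, and the cases are made up rather than drawn from any study. The `tolerance` parameter switches between the Gilbert et al. (2020) safety definition (one level of slack) and the stricter "at least as conservative" definition.

```python
# Triage levels ordered from least to most conservative.
# This three-level scale is an assumption; real OSCs may use 3 to 8+ levels.
LEVELS = ["self-care", "non-urgent", "emergency"]
RANK = {level: i for i, level in enumerate(LEVELS)}

def top_k_match(correct_dx, suggested, k):
    """True if the labeled diagnosis appears in the first k suggestions."""
    return correct_dx in suggested[:k]

def triage_accurate(result, label):
    """True if the OSC triage result equals the labeled triage level."""
    return result == label

def triage_safe(result, label, tolerance=0):
    """True if the result is at most `tolerance` levels less conservative than the label.

    tolerance=1 mirrors the Gilbert et al. (2020) definition;
    tolerance=0 is the stricter "at least as conservative" definition.
    """
    return RANK[result] >= RANK[label] - tolerance

def rate(flags):
    flags = list(flags)
    return sum(flags) / len(flags)

# Hypothetical results: (correct dx, OSC suggestions, labeled triage, OSC triage).
cases = [
    ("migraine", ["tension headache", "migraine", "sinusitis"], "non-urgent", "non-urgent"),
    ("appendicitis", ["gastroenteritis", "constipation", "appendicitis"], "emergency", "non-urgent"),
    ("common cold", ["common cold", "influenza", "COVID-19"], "self-care", "non-urgent"),
    ("stroke", ["migraine", "vertigo", "anxiety"], "emergency", "self-care"),
]
top1 = rate(top_k_match(dx, sug, 1) for dx, sug, _, _ in cases)
top3 = rate(top_k_match(dx, sug, 3) for dx, sug, _, _ in cases)
accuracy = rate(triage_accurate(res, lab) for _, _, lab, res in cases)
safe_strict = rate(triage_safe(res, lab) for _, _, lab, res in cases)
safe_lenient = rate(triage_safe(res, lab, tolerance=1) for _, _, lab, res in cases)
print(top1, top3, accuracy, safe_strict, safe_lenient)
```

Note how the same four results produce different safety rates under the two definitions: the emergency case triaged one level too low counts as safe with one level of slack but unsafe under the stricter rule.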

Triage safety and accuracy are arguably more important than diagnostic accuracy; routing a patient to the correct site of care with the correct urgency enables appropriate clinical management and reduction of morbidity or mortality. Recognizing a safety risk, OSCs tend to “over-triage” and often suggest intervention with a healthcare service for conditions that may be self-managed (Painter et al., 2022). Triage dispositions are more finite in nature compared with a large differential diagnosis. That is, triage results can be narrowed to just three categories (self-care, non-urgent, emergency), while a presentation of abdominal pain could encompass 20+ potential diagnoses.
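Because triage can be reduced to three ordered categories, over- and under-triage are easy to tally once each result is compared with its label. A small sketch, assuming the three-category scale named above; the label/result pairs are made up for illustration.

```python
from collections import Counter

# Three categories from the text, ordered from least to most urgent.
LEVELS = ["self-care", "non-urgent", "emergency"]
RANK = {level: i for i, level in enumerate(LEVELS)}

def triage_direction(result, label):
    """Classify an OSC triage result relative to the labeled level."""
    diff = RANK[result] - RANK[label]
    if diff > 0:
        return "over-triage"
    if diff < 0:
        return "under-triage"
    return "correct"

# Hypothetical (label, OSC result) pairs.
pairs = [
    ("self-care", "non-urgent"),
    ("self-care", "emergency"),
    ("non-urgent", "non-urgent"),
    ("emergency", "emergency"),
    ("emergency", "non-urgent"),
]
tally = Counter(triage_direction(result, label) for label, result in pairs)
print(tally)
```

Reporting the two error directions separately matters because they carry different costs: over-triage wastes care resources, while under-triage is the safety risk.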

Benchmarks: What’s “good” accuracy?

While many OSC studies directly compare to a clinician, these are good numbers to have in mind for provider accuracy in practice at large. Considering this data, perhaps a minimum target for OSC diagnosis accuracy would be >60%. Though, as a clinician, patient, or caregiver, I don't find that number very reassuring!

Provider Triage accuracy and safety. A 2020 study in BMC Family Practice shows phone triage accuracy to be 66.3% for Registered Nurses and 71.5% for General Practitioners (Graversen et al., 2020).

Provider Diagnosis accuracy. Studies report clinician diagnosis error rates between 5% and 15% (Graber et al., 2005; Singh et al., 2014). And, by the way, that’s with access to medical history, physical exam, diagnostic labs and imaging, and expert consultation. A small study of 80 medical outpatients showed 76% physician accuracy in predicting diagnosis based on history alone (Peterson et al., 1992). These studies do not segment by race or gender.

Race and gender bias in diagnosis delays. We know females and people of color have historically been significantly underrepresented in clinical research (Bierer et al., 2022). Bias in the underlying data set will impact proper triage and diagnosis of stratified populations.

A 2019 analysis in Denmark, for example, found that in 72% of cases, women waited longer on average for a diagnosis than men (Westergaard et al., 2019). A 2006 study showed African American women had 1.39-fold odds (95% CI, 1.18-1.63) of a delay of 2+ months in receiving a breast cancer diagnosis (Gorin, 2006).

Carefully developed algorithms do have the potential to mitigate risk of bias (Parikh et al., 2019).

Studies to know

Vignette Study: Semigran et al., 2015

This 2015 BMJ study evaluated the accuracy of 23 online symptom checkers using 45 clinical vignettes. The cases were equally divided into 3 triage categories (emergent, non-emergent, self care reasonable) and spanned both common and uncommon conditions. This approach is helpful for comparing multiple OSCs at the same time, but doesn’t capture real-world complexity.

Conclusions:

OSCs had deficits in both triage and diagnosis. They provided the correct first diagnosis in 34% of vignettes and appropriate triage advice for only 57% of vignettes. OSCs performed decently well with Emergent triage at 80% correct.

OSC triage advice is generally risk averse, encouraging users to seek care for conditions where self-care is reasonable.

A limitation is that the vignettes were not tested against physicians, so we can't tell how solvable the cases are by a human.

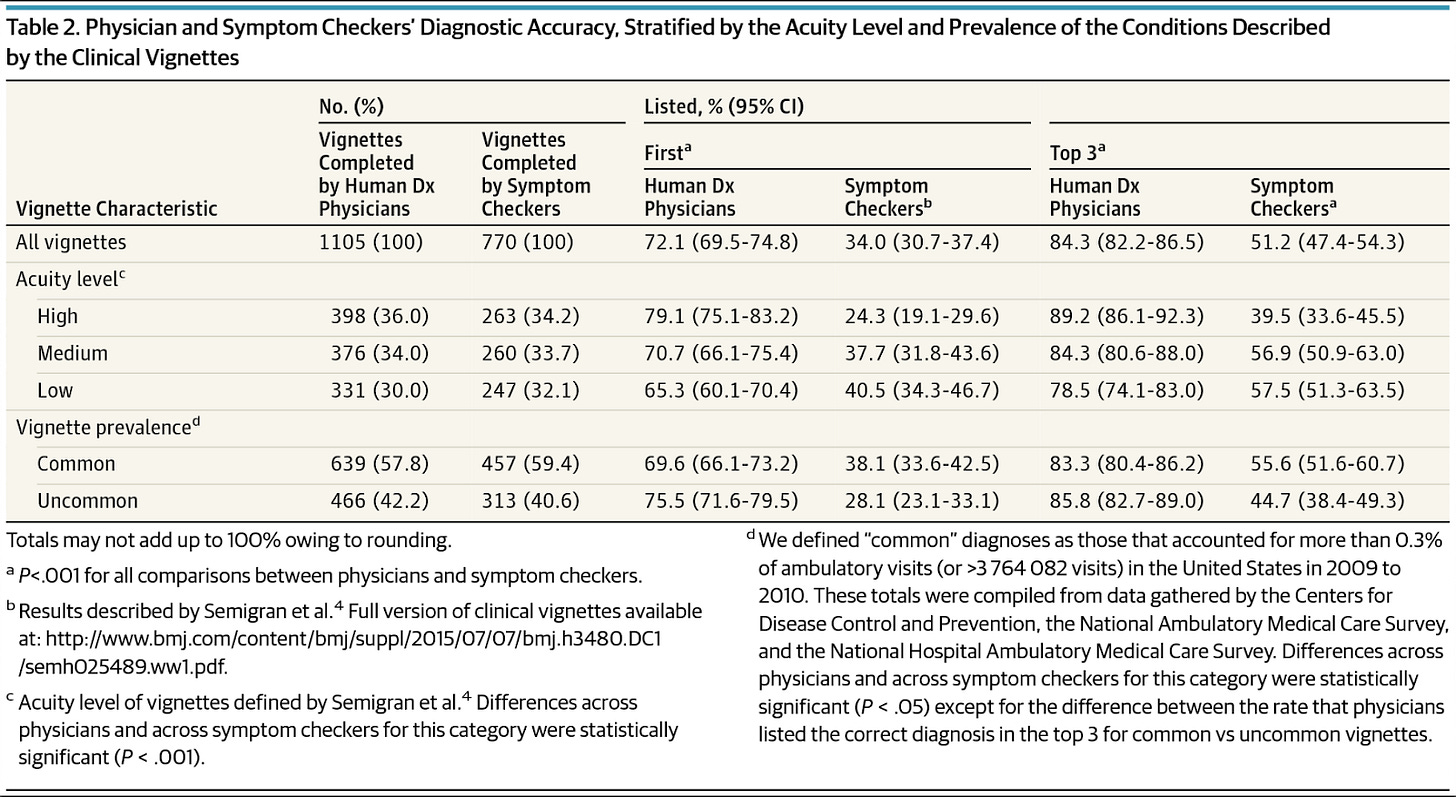

Vignette Study: Semigran et al., 2016

This builds upon the 2015 study by directly comparing OSCs to physicians on diagnostic accuracy for the original 45 clinical vignettes. This follow-up was important because it showed how human-solvable these vignettes really were.

Conclusions:

Physicians vastly outperformed the OSCs: 72% to 34% for the first listed diagnosis and 84% to 51% for a correct diagnosis within the top three listed.

Physicians were more likely than OSCs to list the correct diagnosis first for high-acuity and uncommon vignettes.

Prospective Clinical Study: ????

I couldn’t find a great example to point you to here. The existing studies are of high or unknown risk of bias. They are narrow in scope, examining a small area of medicine like a hand clinic, inflammatory arthritis, or low-priority ED patients (see relevant studies listed in Wallace et al., 2022). This provides neither a holistic understanding of performance nor a good match for the expected use case of an OSC (a pre-visit Google search).

Systematic Review: Wallace et al., 2022

This systematic review included 10 studies, but only one was considered to be of low risk of bias (Semigran et al., 2015). Researchers evaluated the accuracy of symptom checkers using a variety of medical conditions. Some studies were focused on one area of medicine while others covered general medicine. Half of the studies recruited real patients and half used simulated cases.

Conclusions:

Primary diagnosis accuracy is low and varied among OSCs, raising safety and regulatory concerns.

Triage accuracy is generally better than diagnosis accuracy.

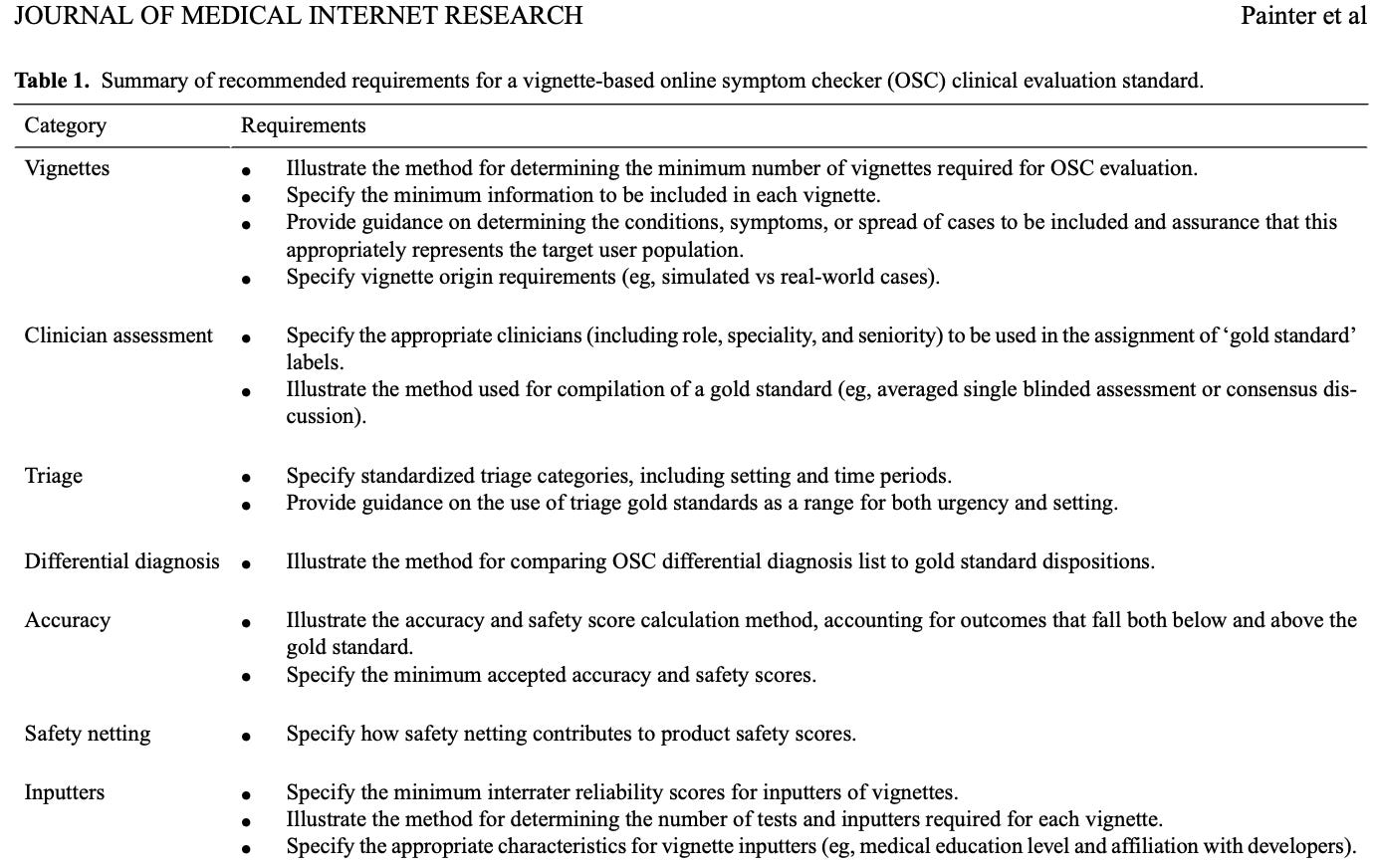

Evaluation Standard: Painter et al., 2022

This viewpoint article published in JMIR characterizes a set of requirements for a standardized vignette-based clinical evaluation process for OSCs. This is important because following a standard will reduce the methodological discrepancies that drive variability in study outcomes.

Suggestions for a more realistic evaluation

I think the most important thing is that we capture an assessment of the intended or typical use of an OSC, that is, use prior to a provider visit. If we bucket pre-provider visit OSC outcomes into three categories, we see that existing study approaches are inadequate at assessing each real-world possibility. How might this be better assessed?

Self-Care: In this case, imagine you use the OSC and learn that your headache is caused by tension, which can be treated at home with ibuprofen. Considering the clinical vignette limitations we talked about above, and how current prospective clinical studies occur at the point of care, there is a gap in ideal evaluation here. We could set up a test group that uses the OSC and also calls into a nurse triage line to validate the triage disposition. The control group would receive no OSC intervention, but rather follow their normal process, logging their symptoms and self-triage determination; a nurse triage line would determine if the self-assessment was correct. It would also be interesting to ask participants to report their own longitudinal outcomes. For instance, at the time of symptom onset and 1 week post symptom onset, we could ask the participant to report on care seeking activity and diagnosis (self-diagnosed or provider confirmed).
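As a rough sketch of how the two-arm comparison described above might be analyzed, the snippet below computes, for each arm, how often the participant's triage decision matched the nurse triage line. The record fields, arm names, and data are all hypothetical; the records are invented to show the calculation and the resulting numbers carry no meaning.

```python
from dataclasses import dataclass

@dataclass
class Participant:
    arm: str           # "osc" (test group) or "control"
    decision: str      # OSC result (test arm) or self-triage (control arm)
    nurse_triage: str  # reference disposition from the nurse triage line

def agreement_by_arm(participants):
    """Share of participants in each arm whose decision matched nurse triage."""
    totals, matches = {}, {}
    for p in participants:
        totals[p.arm] = totals.get(p.arm, 0) + 1
        matches[p.arm] = matches.get(p.arm, 0) + (p.decision == p.nurse_triage)
    return {arm: matches[arm] / totals[arm] for arm in totals}

# Made-up records purely to exercise the function.
participants = [
    Participant("osc", "self-care", "self-care"),
    Participant("osc", "non-urgent", "self-care"),
    Participant("osc", "emergency", "emergency"),
    Participant("osc", "non-urgent", "non-urgent"),
    Participant("control", "self-care", "non-urgent"),
    Participant("control", "self-care", "self-care"),
    Participant("control", "non-urgent", "emergency"),
    Participant("control", "emergency", "emergency"),
]
agreement = agreement_by_arm(participants)
print(agreement)
```

A real study would also need a sample size calculation and a statistical test for the difference between arms, which this sketch leaves out.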

Provider Visit: Imagine this tension headache is worsening and ibuprofen is not helping a bit. The OSC tells you to see an urgent care or primary care provider. To assess triage, I think it would still be important to compare to a nurse triage line. Next, I see two opportunities for diagnosis accuracy evaluation. We could compare the OSC to the treating provider’s assessment. Alternatively, we could compare the pre-visit OSC outcome to claims data. Intricacies in analyzing diagnosis codes (ICD-10), procedure codes (CPTs), the site of care, and temporal factors complicate determination of the correctness of the OSC outcomes. A control group similar to that described in the self-care evaluation could be used.

Emergency: Imagine that headache is the worst one of your life. It could be a brain bleed, and mere seconds count. In this instance, symptom checker use would not be advisable. Hopefully, someone in this clinical scenario wouldn’t be googling or symptom checking and would just call 911. In a clinical trial, there may be instances of an emergency scenario presenting to the OSC that could be compared to nurse triage or emergency department claims data. That aside, I think using the existing clinical vignette evaluation approach makes the most sense.

Wrapping up:

Most studies to date use clinical vignettes or narrow-scope clinical studies to determine triage and diagnosis accuracy of OSCs. Compared to physician diagnosis and triage accuracy, they generally show low triage and diagnosis accuracy. However, these study methodologies don’t speak well to how OSCs perform in the real world.

Future studies should examine real-world data comparing the standard use case for an OSC (replacing a pre-visit Google search) with patient-reported outcomes and/or clinician assessment.

Third-party evaluation processes, a standard vignette-based evaluation methodology, and protocols for real-world studies all need to be adopted.

References

NICE: Evidence standards framework (ESF) for digital health technologies. (n.d.). Retrieved December 21, 2022, from https://www.nice.org.uk/about/what-we-do/our-programmes/evidence-standards-framework-for-digital-health-technologies

Bierer, B. E., Meloney, L. G., Ahmed, H. R., & White, S. A. (2022). Advancing the inclusion of underrepresented women in clinical research. Cell Reports. Medicine, 3(4), 100553. https://doi.org/10.1016/j.xcrm.2022.100553

El-Osta, A., Webber, I., Alaa, A., Bagkeris, E., Mian, S., Taghavi Azar Sharabiani, M., & Majeed, A. (2022). What is the suitability of clinical vignettes in benchmarking the performance of online symptom checkers? An audit study. BMJ Open, 12(4), e053566. https://doi.org/10.1136/bmjopen-2021-053566

Gilbert, S., Mehl, A., Baluch, A., Cawley, C., Challiner, J., Fraser, H., Millen, E., Montazeri, M., Multmeier, J., Pick, F., Richter, C., Türk, E., Upadhyay, S., Virani, V., Vona, N., Wicks, P., & Novorol, C. (2020). How accurate are digital symptom assessment apps for suggesting conditions and urgency advice? A clinical vignettes comparison to GPs. BMJ Open, 10(12), e040269. https://doi.org/10.1136/bmjopen-2020-040269

Gorin, S. S. (2006). Delays in Breast Cancer Diagnosis and Treatment by Racial/Ethnic Group. Archives of Internal Medicine, 166(20), 2244. https://doi.org/10.1001/archinte.166.20.2244

Graber, M. L. (2013). The incidence of diagnostic error in medicine. BMJ Quality & Safety, 22(Suppl 2), ii21–ii27. https://doi.org/10.1136/bmjqs-2012-001615

Graversen, D. S., Christensen, M. B., Pedersen, A. F., Carlsen, A. H., Bro, F., Christensen, H. C., Vestergaard, C. H., & Huibers, L. (2020). Safety, efficiency and health-related quality of telephone triage conducted by general practitioners, nurses, or physicians in out-of-hours primary care: a quasi-experimental study using the Assessment of Quality in Telephone Triage (AQTT) to assess audio-recorded telephone calls. BMC Family Practice, 21(1), 84. https://doi.org/10.1186/s12875-020-01122-z

Mathews, S. C., McShea, M. J., Hanley, C. L., Ravitz, A., Labrique, A. B., & Cohen, A. B. (2019). Digital health: a path to validation. In npj Digital Medicine (Vol. 2, Issue 1). Nature Publishing Group. https://doi.org/10.1038/s41746-019-0111-3

Painter, A., Hayhoe, B., Riboli-Sasco, E., & El-Osta, A. (2022). Online Symptom Checkers: Recommendations for a Vignette-Based Clinical Evaluation Standard. Journal of Medical Internet Research, 24(10), e37408. https://doi.org/10.2196/37408

Parikh, R. B., Teeple, S., & Navathe, A. S. (2019). Addressing Bias in Artificial Intelligence in Health Care. JAMA, 322(24), 2377. https://doi.org/10.1001/jama.2019.18058

Peterson, M. C., Holbrook, J. H., von Hales, D., Smith, N. L., & Staker, L. V. (1992). Contributions of the history, physical examination, and laboratory investigation in making medical diagnoses. The Western Journal of Medicine, 156(2), 163–165.

Semigran, H. L., Linder, J. A., Gidengil, C., & Mehrotra, A. (2015). Evaluation of symptom checkers for self diagnosis and triage: audit study. BMJ, h3480. https://doi.org/10.1136/bmj.h3480

Semigran, H. L., Levine, D. M., Nundy, S., & Mehrotra, A. (2016). Comparison of Physician and Computer Diagnostic Accuracy. JAMA Internal Medicine, 176(12), 1860. https://doi.org/10.1001/jamainternmed.2016.6001

Singh, H., Meyer, A. N. D., & Thomas, E. J. (2014). The frequency of diagnostic errors in outpatient care: estimations from three large observational studies involving US adult populations. BMJ Quality & Safety, 23(9), 727–731. https://doi.org/10.1136/bmjqs-2013-002627

Wallace, W., Chan, C., Chidambaram, S., Hanna, L., Iqbal, F. M., Acharya, A., Normahani, P., Ashrafian, H., Markar, S. R., Sounderajah, V., & Darzi, A. (2022). The diagnostic and triage accuracy of digital and online symptom checker tools: a systematic review. NPJ Digital Medicine, 5(1), 118. https://doi.org/10.1038/s41746-022-00667-w

Westergaard, D., Moseley, P., Sørup, F. K. H., Baldi, P., & Brunak, S. (2019). Population-wide analysis of differences in disease progression patterns in men and women. Nature Communications, 10(1), 666. https://doi.org/10.1038/s41467-019-08475-9

Thanks for the outstanding review. I was the Chief Medical Officer for a startup (GYANT) that makes an online symptom checker, and I'm familiar with the literature you've cited above. We also came to the conclusion that triage safety and accuracy were always going to be more important than diagnostic accuracy, and so that's where we focused the majority of our development and QA work. A few additional points we learned along the way:

• There are also situations where it may be inappropriate for even a highly accurate symptom checker to disclose a high possibility of a life-changing diagnosis (e.g., pancreatic cancer or new HIV infection).

• The frequently cited Semigran papers are useful as a basis for evaluation methodologies, but some of the vignettes they published are poor choices for OSC testing - for instance, vignettes derived from case reports of rare presentations, or from "gotcha" board exam questions. We and our clinical advisors created our own library of hundreds of vignettes derived from common and important presentations seen in regular clinical practice, rather than rely on the Semigran ones.

• We were surprised how often our clinical advisors would review a vignette case (or a transcript of a real world case that went through our system) and come up with discordant opinions on appropriate triage levels. I remember a number of occasions where 3 reviewers would give us 3 different answers. There's a lot of grey area in medicine!